The final week of the NASA Frontier Development Lab (FDL) is always intense. After seven weeks of hard work, the projects are making rapid progress: data sets have been produced, first prototypes are running, and the neural networks are learning. At this point most participants just want to stay at their screens and keep working night shifts as they did in the weeks before. However, the groups have to switch gears and focus on their technical reviews, their NASA reports, and their presentations for the final event. That also means it is time for the Explainables. Fortunately, we were really lucky: not only did we end up with a group of already super talented communicators, they also jumped right into our exercises instead of going back to their computer screens.

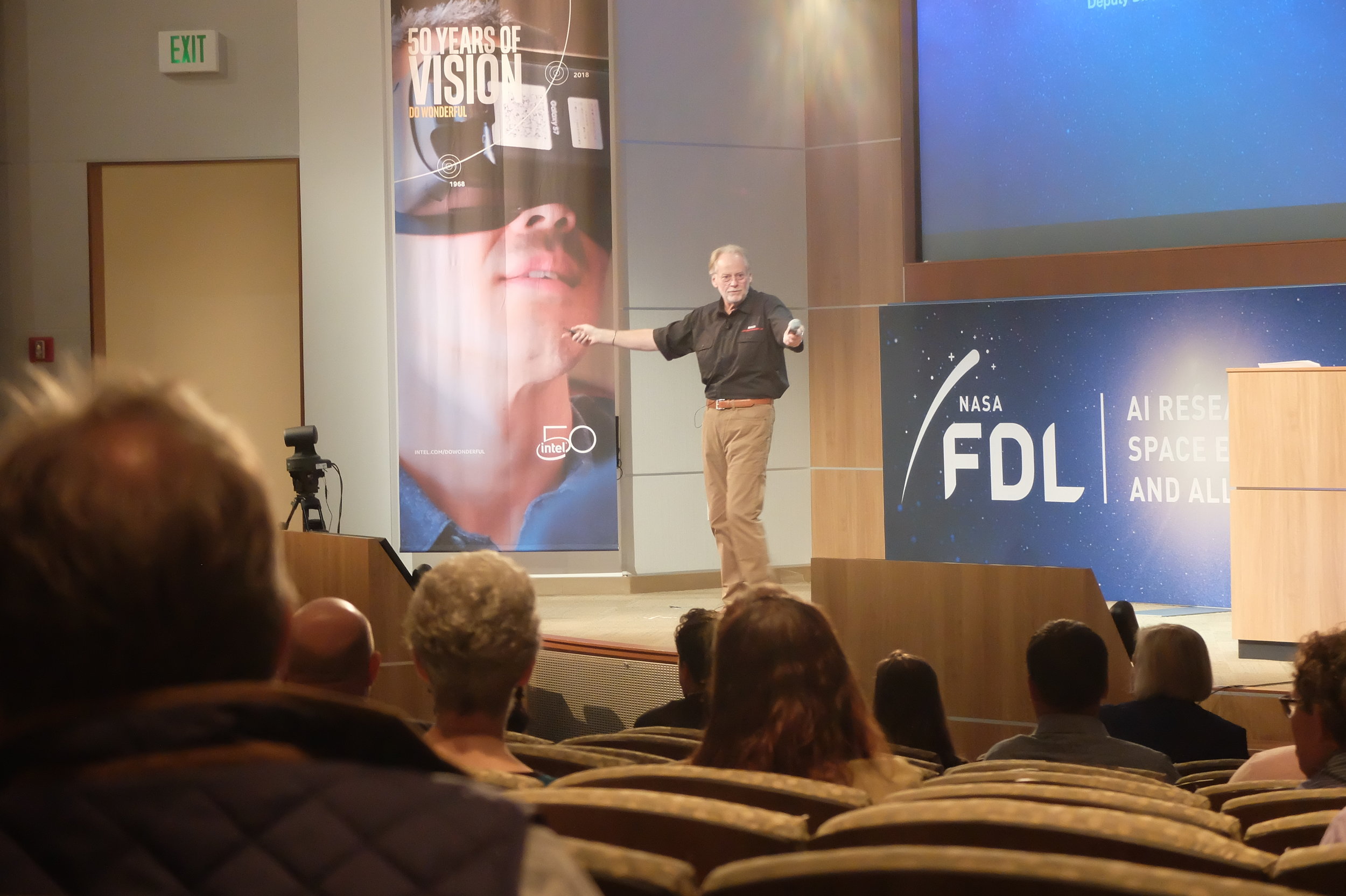

The final day of presentations started around 9 am at the Intel auditorium. The presenters used the lessons they had learned in the Explainables workshops to design interactive, clear, and concise talks. However, several of the participants were nervous, so we relied on warm-up exercises from our facilitators: Amran drew on his expertise as a Sepaktakraw coach for a body warm-up of stretches and moves, followed by Sally's opera-approved breathing and vocal warm-up. A grand rehearsal of all speakers and talks came next.

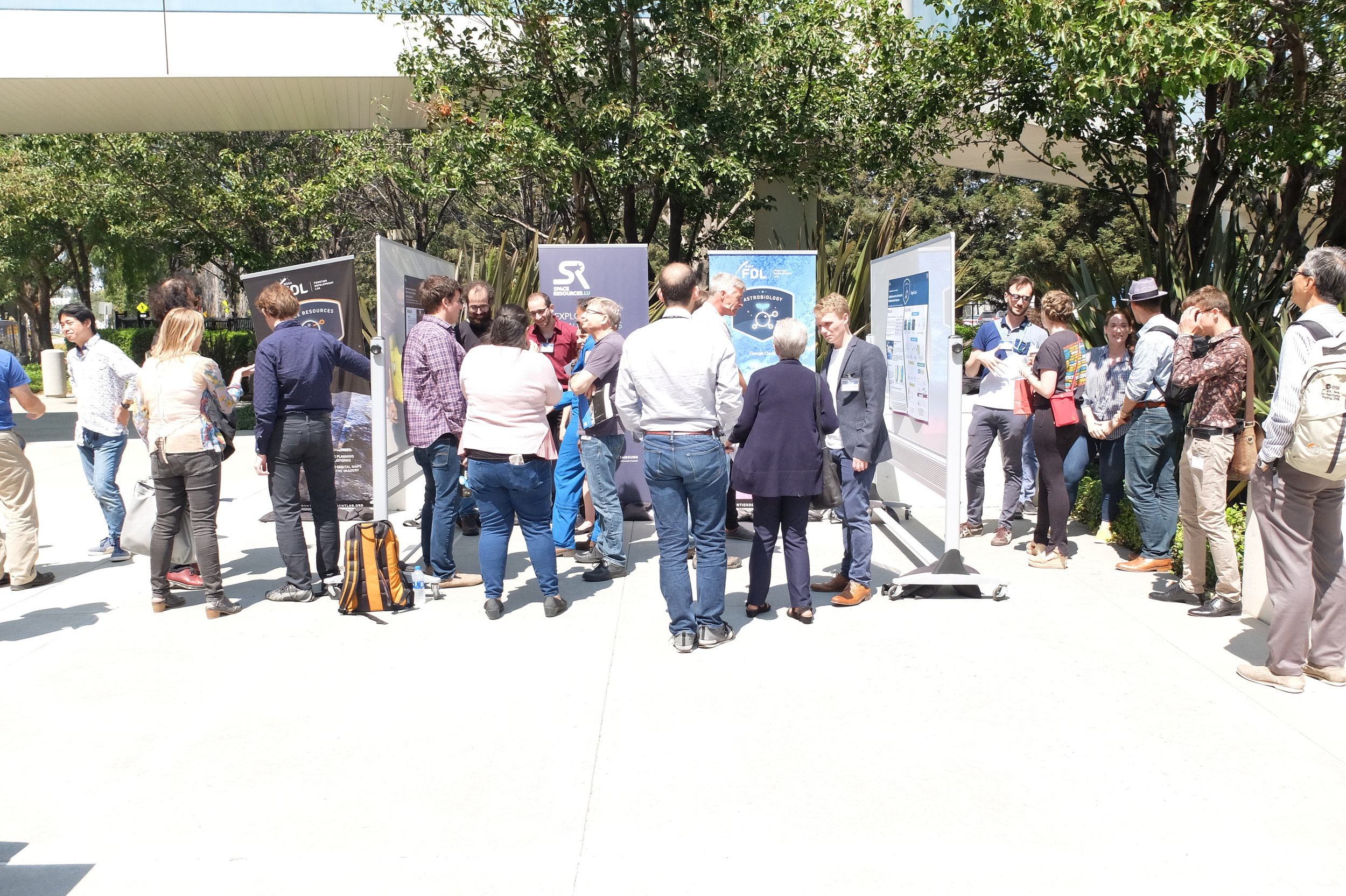

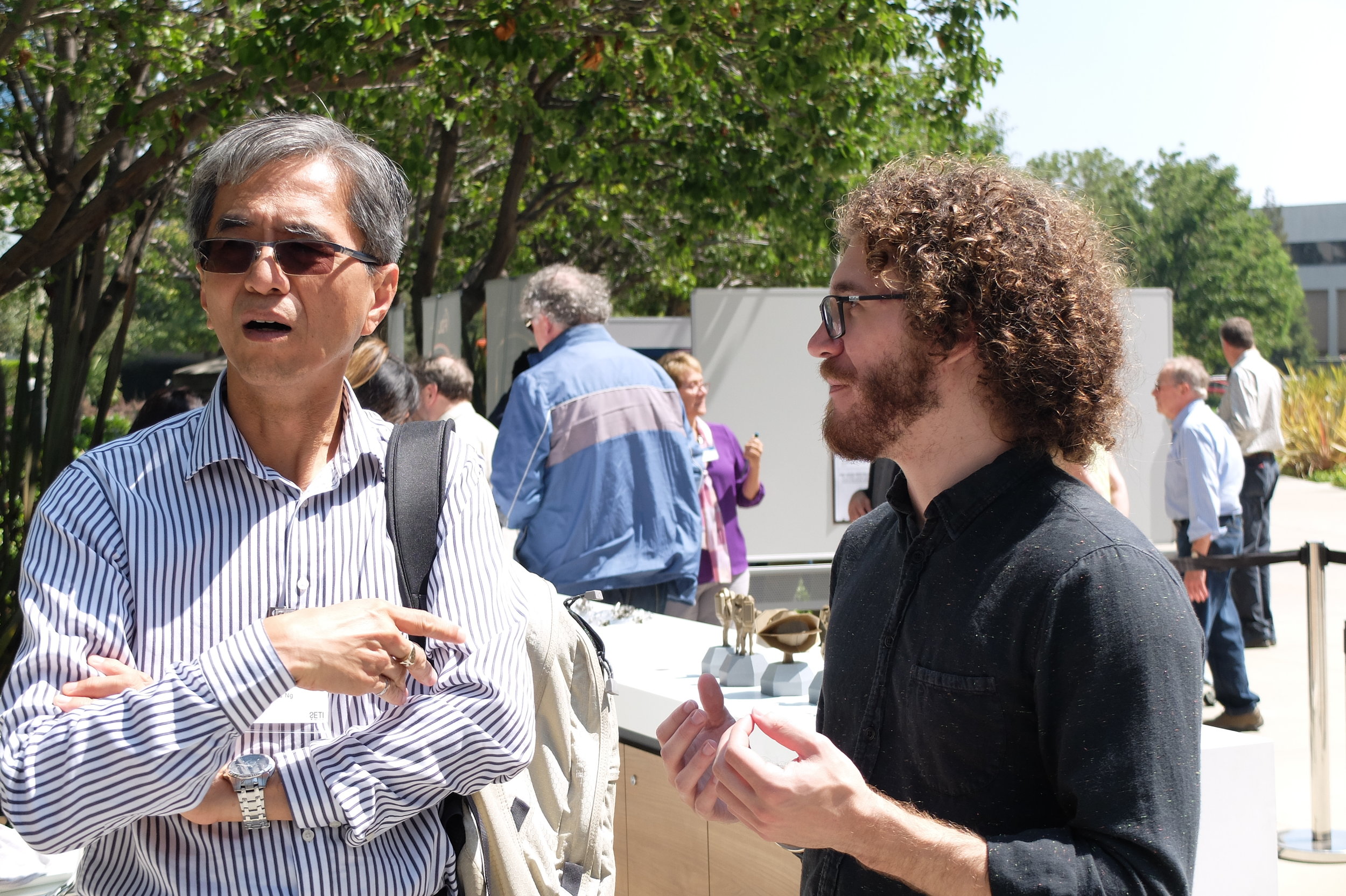

After a few minor adjustments, a rather chaotic technical test, a quick lunch, and a poster session, the final highlight started: the FDL Event Horizon Event.

A recorded video of the final event, including the presentations, can be found here (FDL teams starting at 48:00), and we will feature the individual talks here again as soon as they are available.

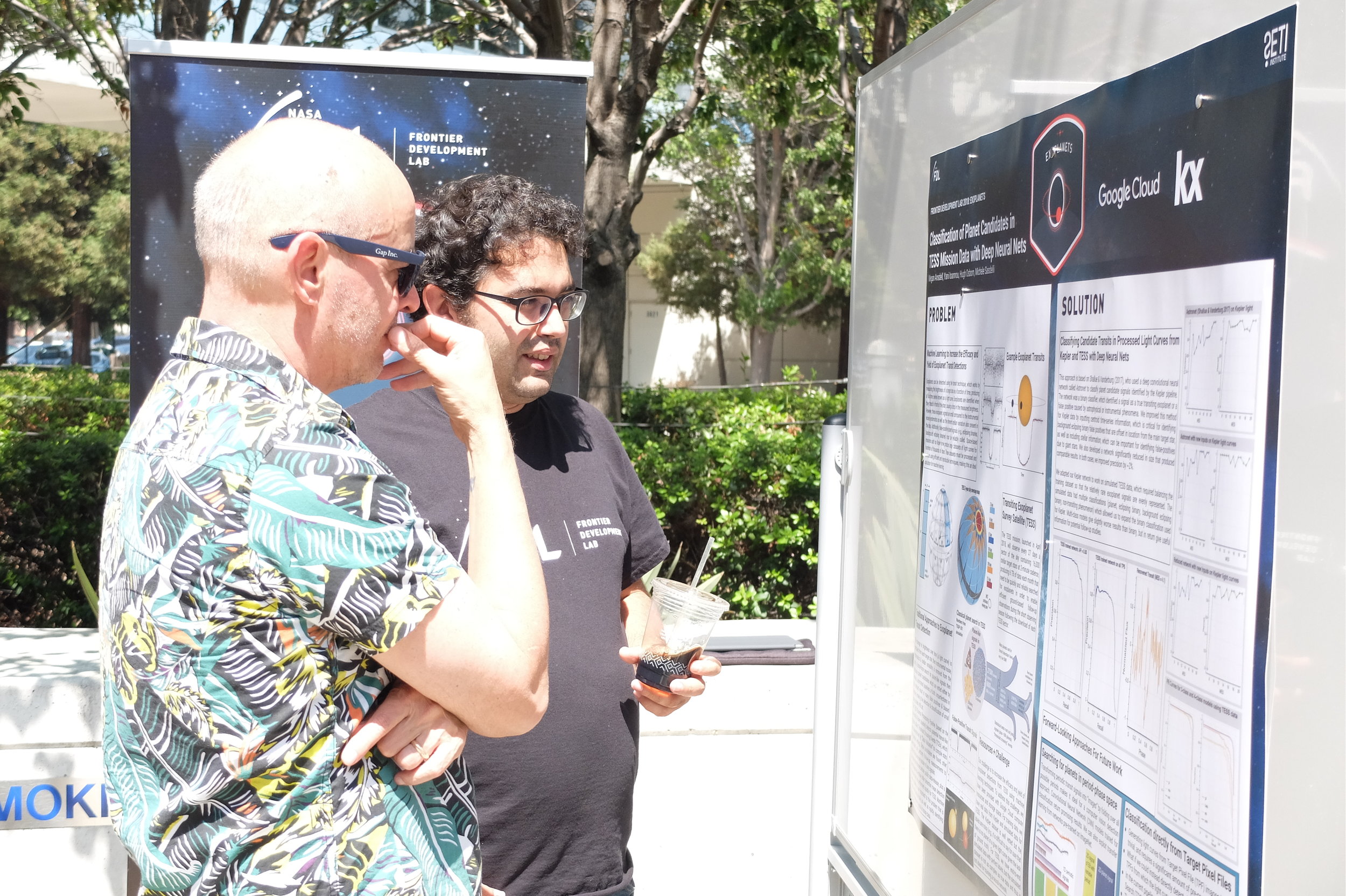

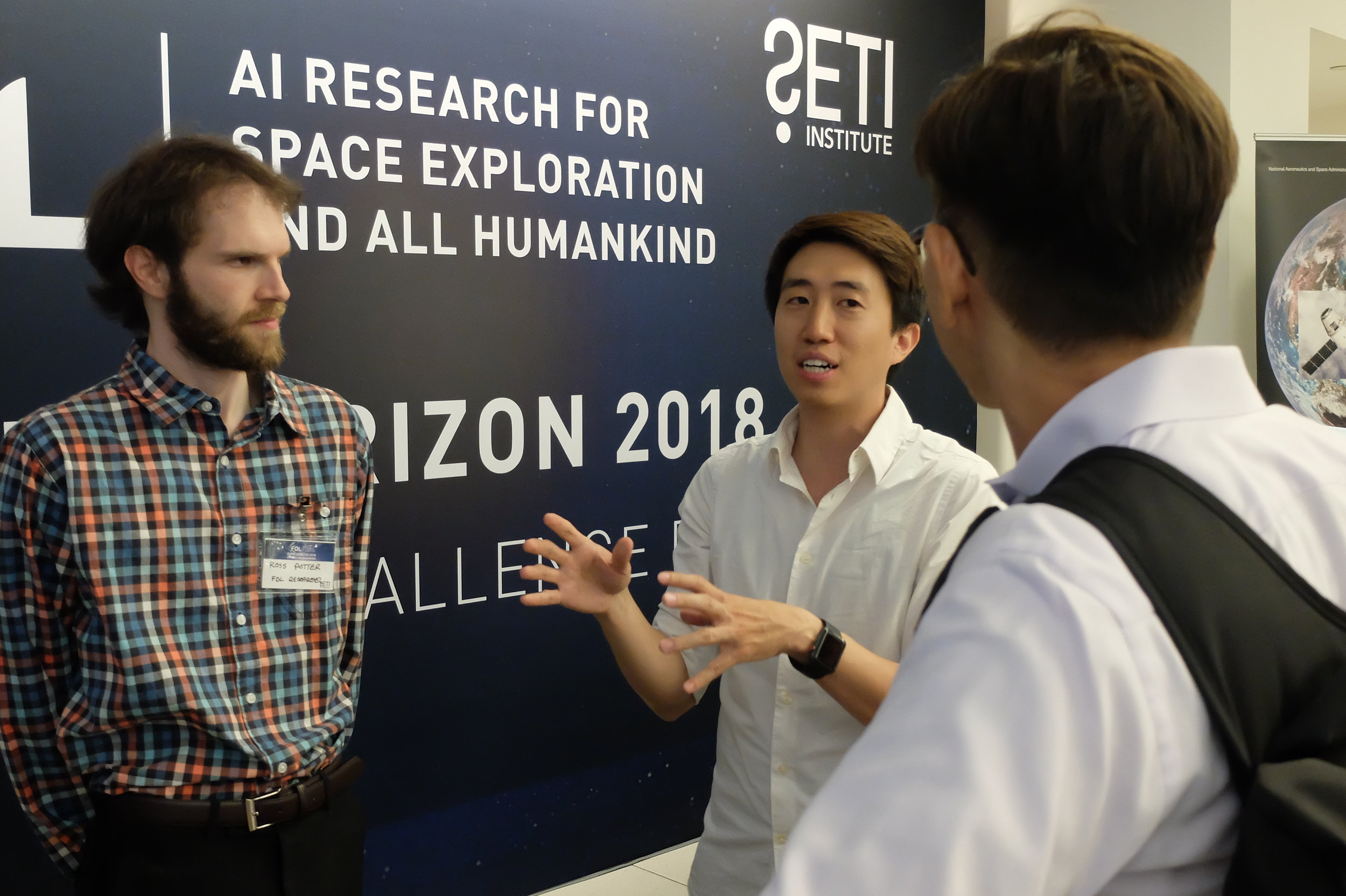

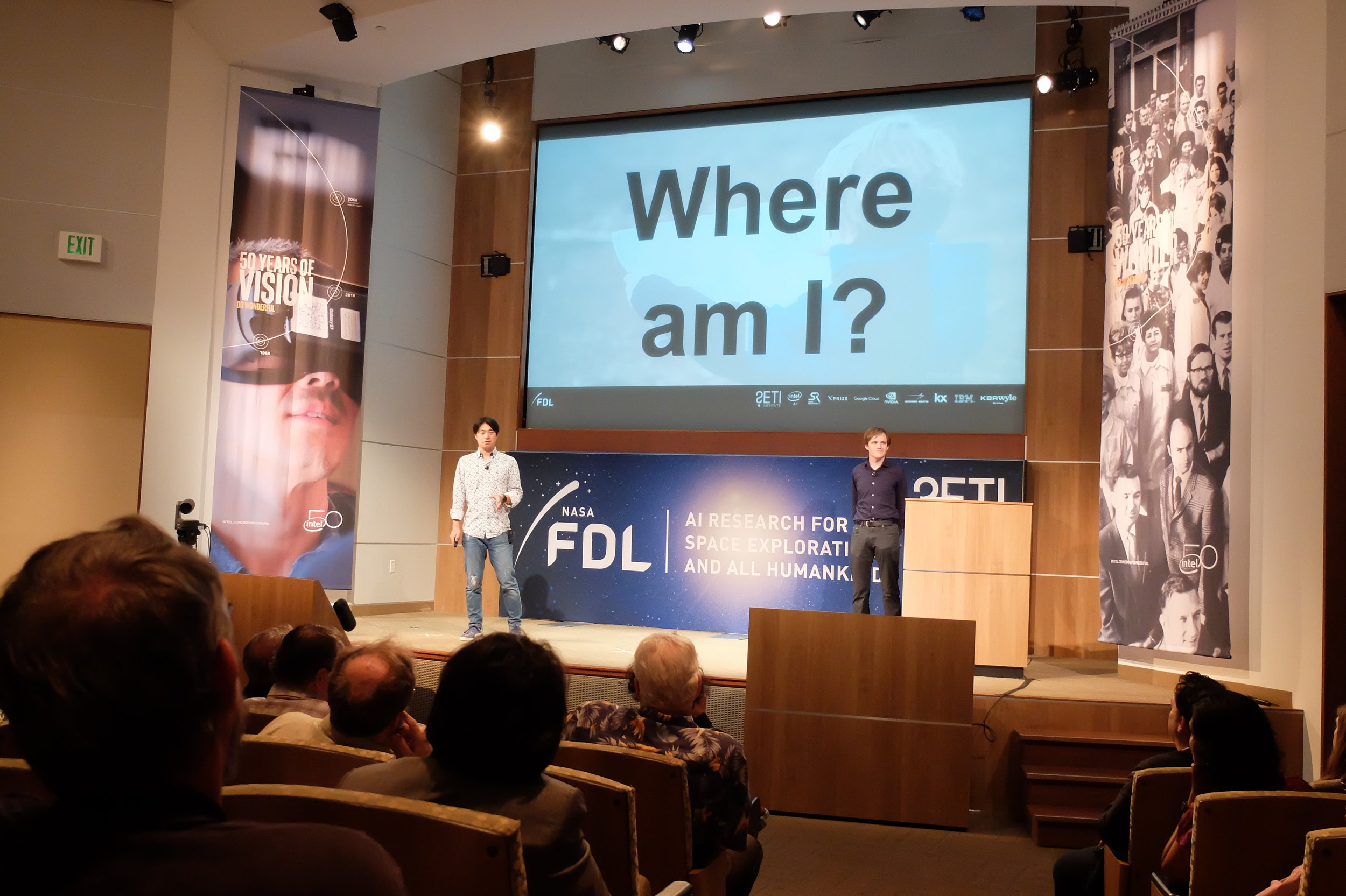

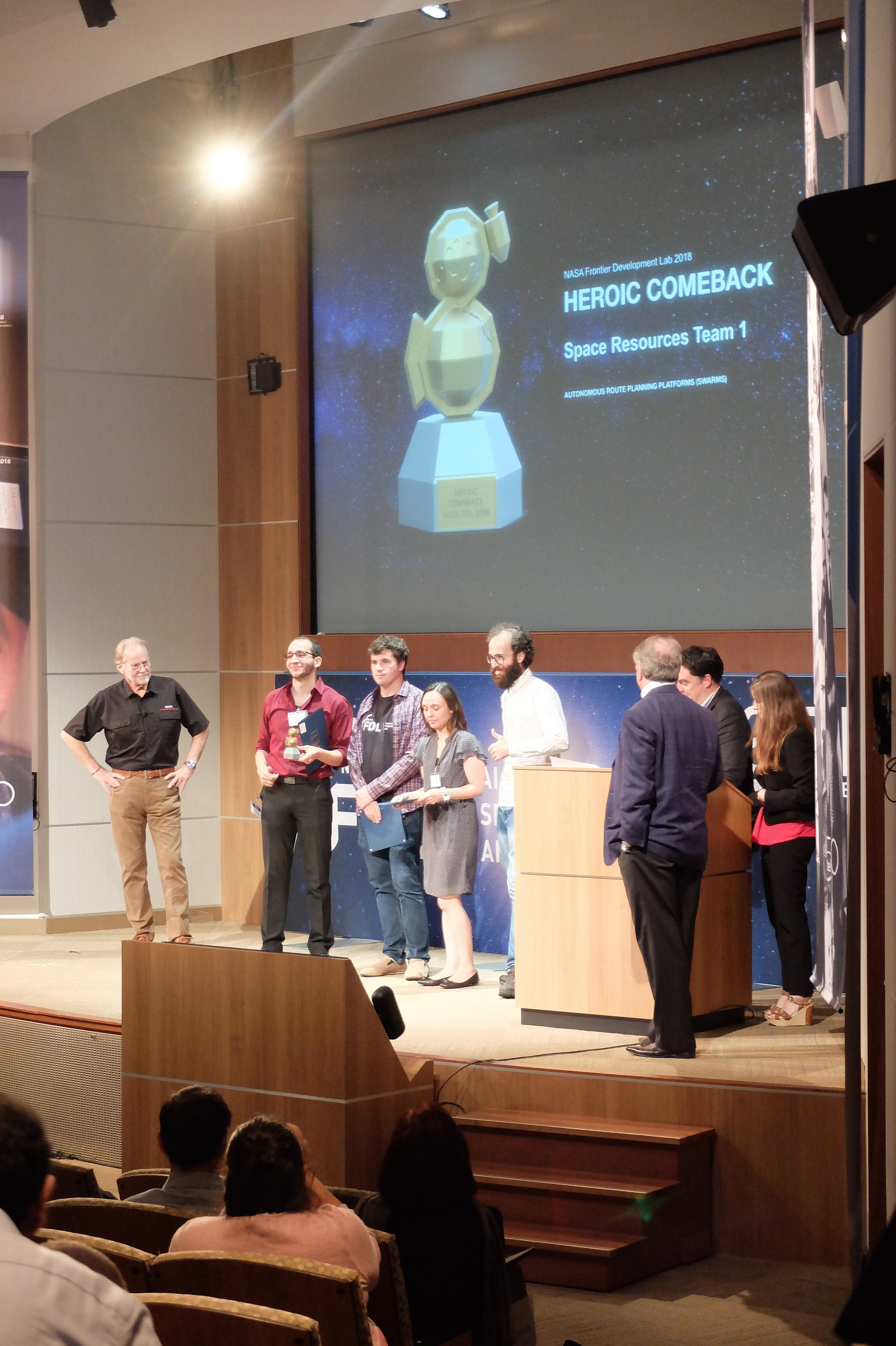

Andrew and Philippe of the Space Resources 2 team (with Ben and Ross) started the afternoon by explaining their work on localization on the Moon with cats and dogs (see title image and gallery below). After that, Zahi and Francisco took the stage for Space Resources 1 (with Ana and Drew) to talk about their challenge of autonomous route planning on the Moon or Mars. Then the two Space Weather teams took over. First, Laura and Karthick of SW1 (with Danny and Kibrom) showed us how they can predict GPS disturbances caused by solar activity and thereby help you avoid driving your car over a cliff (and other, more realistic dangers). Then Richard explained how his team, SW2 (with Rajat, Paul and Alex), "revived" a dead instrument on a solar observatory using artificial intelligence. After that, Hugh of the Exoplanet team (with Megan, Michele and Yanni) showed how their AI learned from Kepler data so that it can eventually find even more interesting alien worlds in data from the upcoming TESS mission.

Hugh's final sentence (maybe inspired by our Explainables 'first lines, last lines' exercise?)

"When we get the first detection of biosignatures on a transiting exoplanet, it's not a stretch to think that it will have been first looked at and classified by an AI rather than a human."

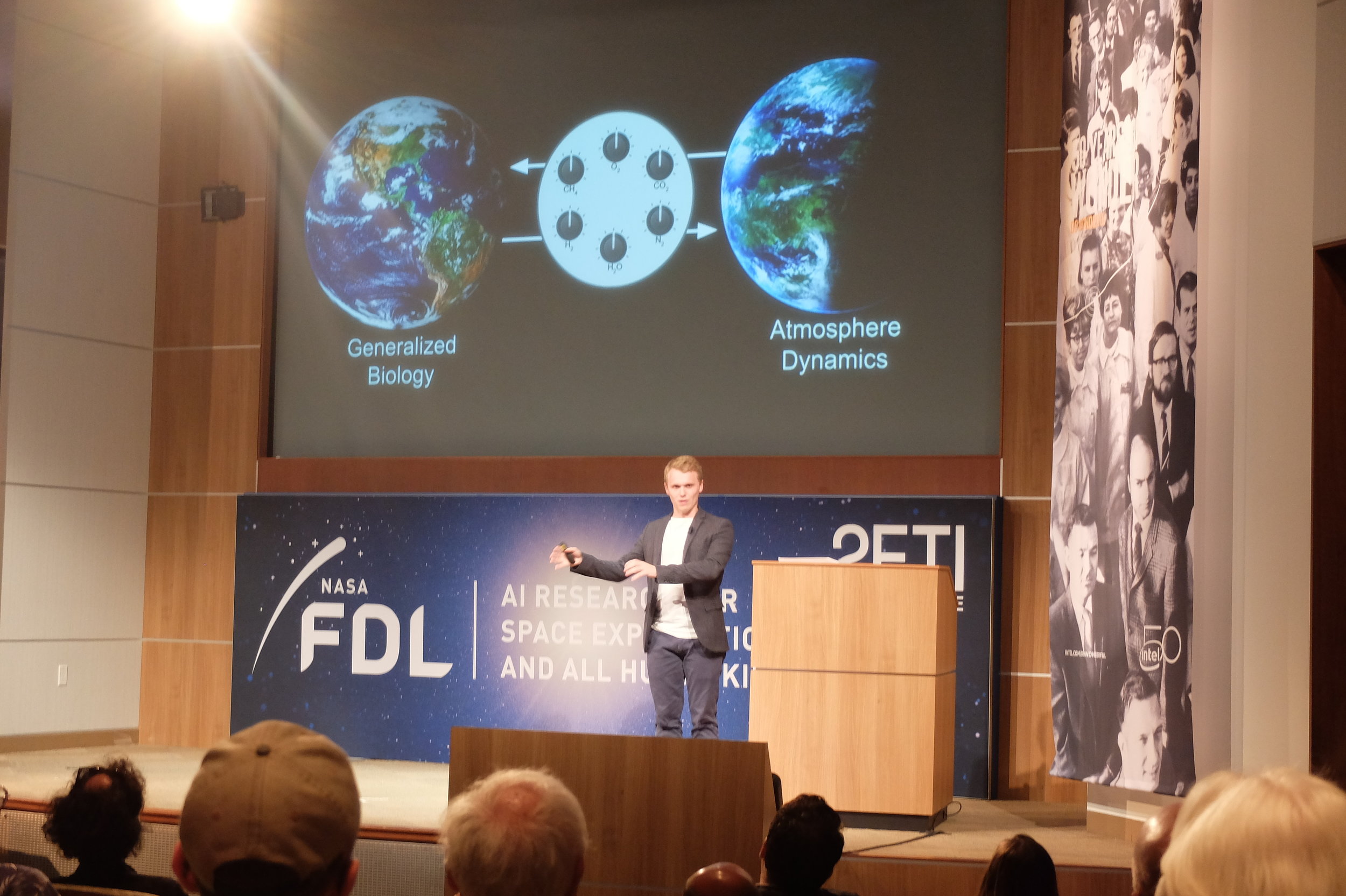

was then picked up by the two Astrobiology teams. First, Michael of AB2 (with Molly, Simone and Frank) explained how they generated 3 million spectra of rocky extrasolar planets and then used a neural network to retrieve their composition, a crucial task for the future of exoplanet exploration. The evening was concluded by Adi and Will of AB1 (with Rodd and Aaron), who started their talk by asking Google "Are we alone in the Universe?". Not satisfied with the answer, they explained how they used the hardware, software and brainpower provided to FDL by Google to model how life not only influences but maybe even controls planetary atmospheres. Again, we cannot recommend enough that you watch all of these amazing presentations.